Brief History

Years ago, the migration from physical servers to virtual servers brought a level of agility that had previously been unknown. Server workloads were no longer tied to physical hardware, so migrations, upgrades, expansions, and hardware maintenance became far less disruptive than they had been. To vacate a physical host, one only needed to place it in maintenance mode, and all the VMs residing there would be live migrated automatically to hosts that could accept additional workloads. In the datacenter, uptime improved, and businesses faced far less disruption.

Once again, we’re in the midst of a transformation in IT: the pivot to hybrid cloud, together with the steady move of application development from virtual servers to container-based applications.

The path to a digital business hits three major stops:

What is clear is that lean, agile development and deployment have become top of mind for IT management across enterprises.

The movement toward a hybrid cloud infrastructure required planning and reconfiguration. While it became possible to run the virtual infrastructure on cloud providers’ architecture, some refactoring was required.

At a certain point, the data center’s investment in virtualization could be leveraged in cloud provider spaces, allowing some degree of consistency between on-premises and off-premises environments. Migrating VMs between these architectures became possible, though still somewhat cumbersome. This reduced learning curves and allowed machines to be moved back and forth. However, because of the size of the virtual disks in use, and the latency and physical limits of the WAN, these migrations could take hours or days.
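To put rough numbers on "hours or days", the following is a minimal back-of-the-envelope sketch. The disk sizes, link speeds, and the 70% usable-bandwidth figure are illustrative assumptions, not measurements from any specific environment, and `transfer_hours` is a hypothetical helper written for this example.

```python
def transfer_hours(disk_gb: float, link_mbps: float, efficiency: float = 0.7) -> float:
    """Estimate the hours needed to copy disk_gb gigabytes over a link_mbps WAN link.

    efficiency is an ASSUMED fraction of the nominal bandwidth that is
    actually usable after protocol overhead and latency effects.
    """
    if disk_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid input")
    bits = disk_gb * 8 * 1000**3                   # decimal gigabytes to bits
    usable_bps = link_mbps * 1000**2 * efficiency  # usable bits per second
    return bits / usable_bps / 3600                # seconds to hours


if __name__ == "__main__":
    # Hypothetical virtual disk sizes (GB) and WAN link speeds (Mbps).
    for size_gb, mbps in [(500, 100), (2000, 100), (2000, 1000)]:
        hours = transfer_hours(size_gb, mbps)
        print(f"{size_gb} GB over {mbps} Mbps: {hours:.1f} h")
```

Under these assumptions, a 500 GB virtual disk needs roughly 16 hours on a 100 Mbps link, and a 2 TB disk needs more than two and a half days, which is in line with the "hours or days" described above.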

The Adoption of Container Methodologies

The advent of fluid container-based architectures has reduced the reliance on heavy virtual servers and opened the way to migrating applications wherever and whenever the business chooses. However, the data attached to those applications has continued to present a challenge for consistency. Latency remains a problem, most notably for application performance.

Roadblocks

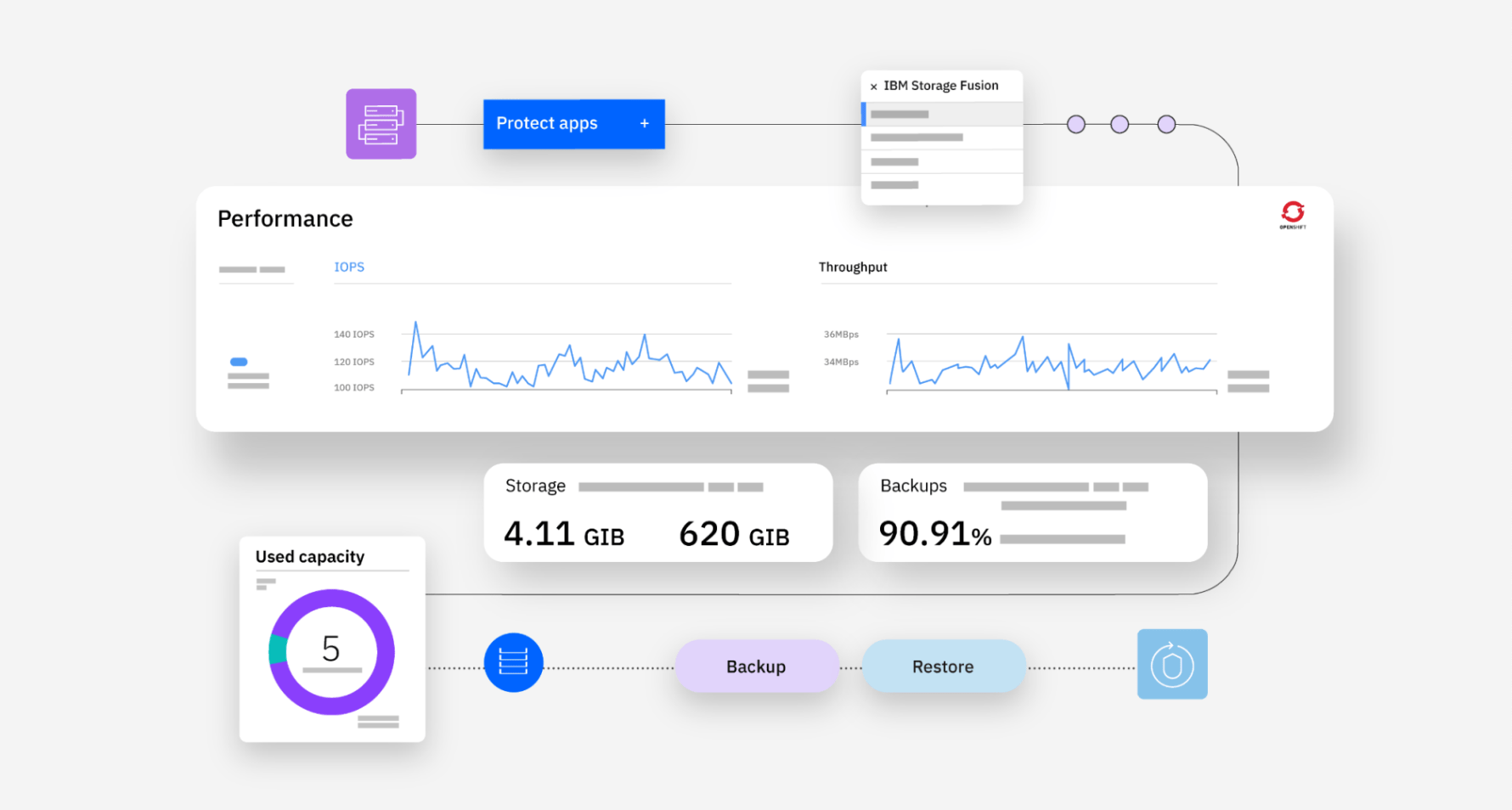

Some of the difficulties with storage for containers stem from how storage is tied to the applications running in those containers. Moving this data affects performance because of latency, limits the agility of the applications themselves, and complicates the ability to back up and restore that data. This often leads to discontinuity and disruptions that can have a significant impact on the business. With IBM’s Storage Fusion, these barriers to success have been overcome.

IBM Storage Fusion

IBM’s Storage Fusion solution brings the mobility, caching, and replication needed to achieve true data mobility and agility in today’s Kubernetes environments. With this technology, the picture becomes complete.

Benefits include:

● Application Data Portability

● Application Flexibility – by way of connectivity to File/Object (NFS and S3)

● Security

● Resiliency

● Data Protection

● Dynamic Expansion – expansion or sharing enabled on any connected storage

● Backup

All functionality is transparent to the user: there is no added complexity, no intervention by a storage administrator is needed, and deployment is truly dynamic. Consumption is fluid, easily achieved, and without overhead.

“As Red Hat OpenShift hybrid cloud application footprints continue to expand both in scale and reach, we have seen organizations look to enterprise-grade data services to support these mission-critical workloads. With the introduction of IBM Storage Fusion, Red Hat OpenShift customers can deploy geo-dispersed hybrid cloud infrastructure with enterprise ready data protection, mobility, scalability, and data discovery – all built in,” said Brent Compton, senior director, Data Foundation Business, Red Hat. “Additionally, IBM Storage Fusion includes Storage Fusion Data Foundation Essentials, providing tight data services integration with Red Hat OpenShift. This combination of Red Hat OpenShift, Storage Fusion Data Foundation and IBM Storage Fusion represents an innovative leap forward in maximizing the value of application containers.”

Summary

Containerization brings the expectation of agility, data protection, backup, and data mobility. The end state of Storage Fusion is to truly separate the user from the infrastructure in the context of the work environment. Consumption of the Kubernetes (K8s) environment is transparent: application admins consume their platform as needed. Automation provisions consumable services for Kubernetes, and policies protect the data, so end users get the resources they require without additional legwork. The environment is adjusted continually, on the fly, while existing assets are leveraged as usable components of the container environment.

Written by Matthew Leib, Product Marketing Manager for IBM Storage Fusion

For more information, visit: https://www.ibm.com/products/storage-fusion